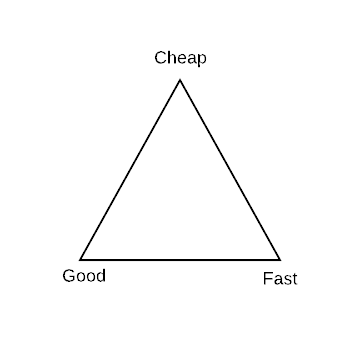

There is an age-old rule that when building a product, you can choose only two of the three: Cheap, Good, and Fast.

So if you need something built quickly and with good quality, it won’t come cheap.

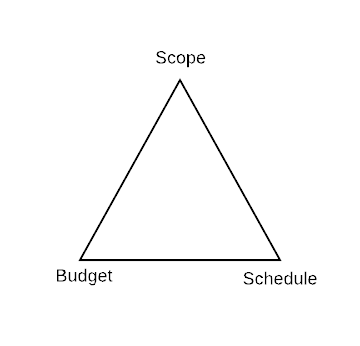

In a software project, these constraints take the form of:

Scope

Budget

Schedule

Quite often, a project manager is caught in the dilemma of controlling these aspects of the project. Striking a good balance among the three is often difficult.

Let’s take a look at some of the practices we can use to manage these three aspects.

Scope

In a traditional project management approach, you would use a Work Breakdown Structure (WBS) to define the feature components that need to be developed. If a change comes in later, it has to go through a Change Control Board (CCB), which analyzes the impact and approves the change if warranted.

In a lean project management approach, the scope is controlled in the form of tickets and requests.

In the more popular agile project management approach, we control scope in terms of the product backlog and sprint backlog.

Schedule

To manage the schedule in a traditional waterfall approach, techniques like the Program Evaluation and Review Technique (PERT) and the Critical Path Method (CPM) are used.
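To make these two techniques concrete, here is a minimal sketch in Python. It assumes the standard PERT three-point estimate, (optimistic + 4 × most likely + pessimistic) / 6, and a simple forward pass for the critical path. The task names, durations, and dependencies are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def pert_estimate(optimistic, most_likely, pessimistic):
    """Standard PERT three-point estimate of a task's duration."""
    return (optimistic + 4 * most_likely + pessimistic) / 6


# Hypothetical tasks: name -> (duration in days, prerequisite tasks)
tasks = {
    "design": (3, []),
    "backend": (5, ["design"]),
    "frontend": (4, ["design"]),
    "testing": (2, ["backend", "frontend"]),
}


def critical_path(tasks):
    """Return (total duration, longest chain of dependent tasks)."""
    graph = {name: deps for name, (_, deps) in tasks.items()}
    earliest_finish = {}
    previous = {}
    # static_order() yields every task after all of its prerequisites
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = 0
        previous[name] = None
        # A task can start only when its slowest prerequisite has finished
        for dep in deps:
            if earliest_finish[dep] > start:
                start = earliest_finish[dep]
                previous[name] = dep
        earliest_finish[name] = start + duration

    # Walk back from the task that finishes last
    node = max(earliest_finish, key=earliest_finish.get)
    total = earliest_finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = previous[node]
    return total, list(reversed(path))


total_days, path = critical_path(tasks)
print(total_days, path)
```

With these sample numbers, the backend work sits on the critical path, so any delay there pushes out the whole schedule, while the frontend work has one day of slack.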

In the Lean project management approach, Kanban boards and queues are used to manage the work. The work is kept in a list and executed based on priority. Service agreements set the priority of work by defining what is critical, major, or minor.
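A priority-ordered work list like this can be sketched as a small priority queue. The following is only an illustration: the `WorkQueue` class, the severity ranking, and the ticket titles are all hypothetical, and a real Kanban tool would also track work-in-progress limits and states.

```python
import heapq
from itertools import count

# Assumed ranking derived from a service agreement: lower number = served first
SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2}


class WorkQueue:
    """Hypothetical work list that hands out the most urgent ticket first."""

    def __init__(self):
        self._heap = []
        self._counter = count()  # tie-breaker: keeps arrival order within a severity

    def add(self, title, severity):
        rank = SEVERITY_RANK[severity]
        heapq.heappush(self._heap, (rank, next(self._counter), title))

    def next_item(self):
        _, _, title = heapq.heappop(self._heap)
        return title

    def __len__(self):
        return len(self._heap)


queue = WorkQueue()
queue.add("Update help text", "minor")
queue.add("Checkout page is down", "critical")
queue.add("Report export is slow", "major")
queue.add("Login fails for new users", "critical")

# Drain the queue in the order the team would pick up the work
work_order = [queue.next_item() for _ in range(len(queue))]
print(work_order)
```

Both critical tickets come out before the major and minor ones, and the two critical tickets keep their arrival order.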

In an Agile project management approach, a sprint-based model is popular for managing the schedule, with releases and roadmaps used to set goals for major features to be released together.

Budget

In the traditional project management approach, we use Earned Value Management (EVM), which compares current performance, the Earned Value (EV), against the Planned Value (PV) and evaluates it against the Actual Cost (AC), which is the cost incurred so far for the work completed.
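The comparison is easier to see with numbers. The sketch below computes the standard EVM indicators (schedule variance, cost variance, and the two performance indexes) from EV, PV, and AC. The function name and the sample figures are hypothetical.

```python
def evm_metrics(planned_value, earned_value, actual_cost):
    """Standard Earned Value Management indicators."""
    return {
        # Negative schedule variance: less work done than planned
        "schedule_variance": earned_value - planned_value,
        # Negative cost variance: work cost more than it was worth
        "cost_variance": earned_value - actual_cost,
        # Index below 1.0: behind schedule
        "spi": earned_value / planned_value,
        # Index below 1.0: over budget
        "cpi": earned_value / actual_cost,
    }


# Hypothetical status: 50,000 of work planned to date, 40,000 of it
# actually completed, at a cost of 45,000 so far
metrics = evm_metrics(planned_value=50_000, earned_value=40_000, actual_cost=45_000)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this example both variances are negative and both indexes are below 1.0, so the project is behind schedule and over budget at the same time.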

In the Lean project management approach, we track Key Performance Indicators (KPIs) to showcase the outcome of the work done.

In Agile project management, we measure Return on Investment (ROI) and use burndown charts to track performance.