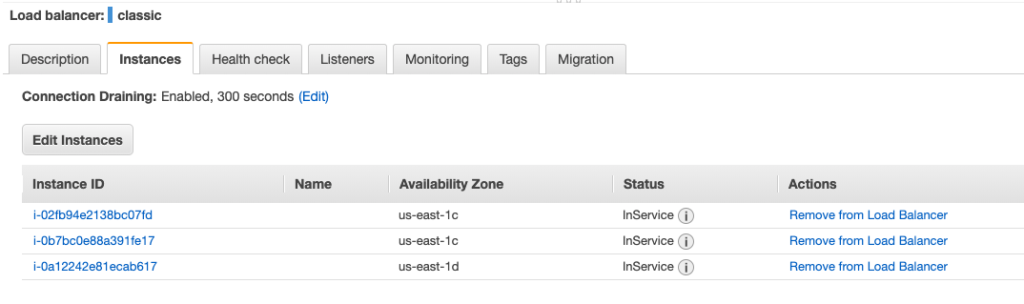

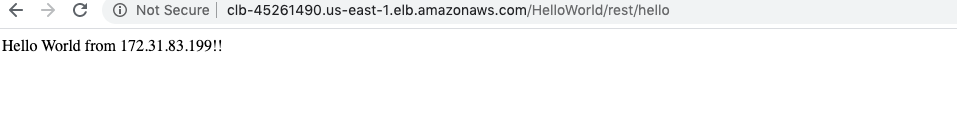

In the last few posts, I talked about how to host your application on an EC2 instance, how to create replicas of application instances, and how to load-balance them behind a Classic Load Balancer. The load balancer did help us distribute load among the instances, but that approach had a serious drawback: all the application instances had to be up and running beforehand. We did not think about the extra money we would pay when the application load is low, or about the situation where our instances choke because the load is too high.

Auto Scaling comes to the rescue in such situations. As the name suggests, you can tell AWS to scale the application instances up or down based on the load coming in. Let’s take a look at how this can be achieved.

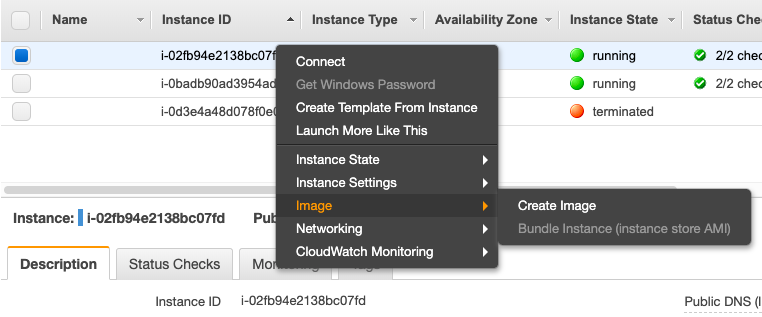

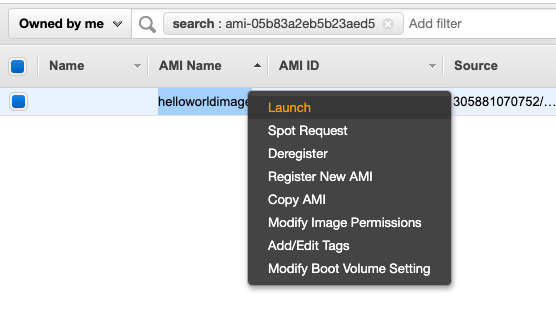

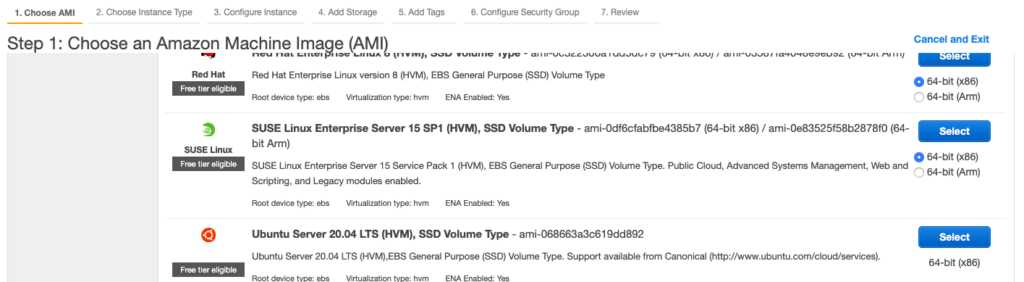

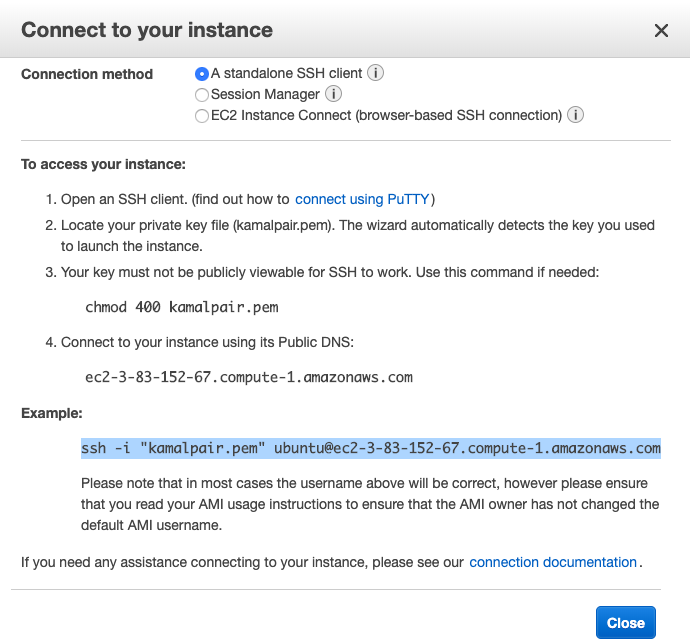

To start, go to the “Auto Scaling Groups” option and click the “Create Auto Scaling Group” button. It will ask you to create a launch configuration first. This is simply a configuration for launching your EC2 instances. As when we created a replica of an EC2 instance, we select all the options; the only difference is that we are not actually launching an instance at this point. We are just creating a configuration that Auto Scaling will use whenever it creates a new instance. Select the AMI, instance type, security group, and key pair carefully to avoid any surprises.
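If you prefer scripting over clicking, the same launch configuration can be expressed as parameters for boto3's `create_launch_configuration` call on the `autoscaling` client. The sketch below only builds the keyword arguments, so it runs without AWS credentials; every concrete value (the name `my-app-lc`, the AMI ID, the security group, the key pair) is a hypothetical placeholder. Note that AWS now recommends launch templates over launch configurations for new setups.

```python
"""Sketch: the console's launch configuration as boto3 keyword arguments.

All concrete values used below are hypothetical placeholders.
"""


def launch_configuration_params(name, ami_id, instance_type, security_group_ids, key_name):
    """Build kwargs for boto3's autoscaling client create_launch_configuration()."""
    return {
        "LaunchConfigurationName": name,
        "ImageId": ami_id,                          # AMI your application instances boot from
        "InstanceType": instance_type,              # machine type, e.g. t2.micro
        "SecurityGroups": list(security_group_ids), # security groups applied to each instance
        "KeyName": key_name,                        # key pair used for SSH access
    }


if __name__ == "__main__":
    params = launch_configuration_params(
        name="my-app-lc",                            # hypothetical name
        ami_id="ami-0123456789abcdef0",              # hypothetical AMI ID
        instance_type="t2.micro",
        security_group_ids=["sg-0123456789abcdef0"], # hypothetical security group
        key_name="my-key-pair",                      # hypothetical key pair
    )
    print(params)
    # To actually create it (requires AWS credentials and real IDs):
    # import boto3
    # boto3.client("autoscaling").create_launch_configuration(**params)
```

Keeping the parameters in one function makes it easy to review the AMI, instance type, security group, and key pair in one place before anything is created.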

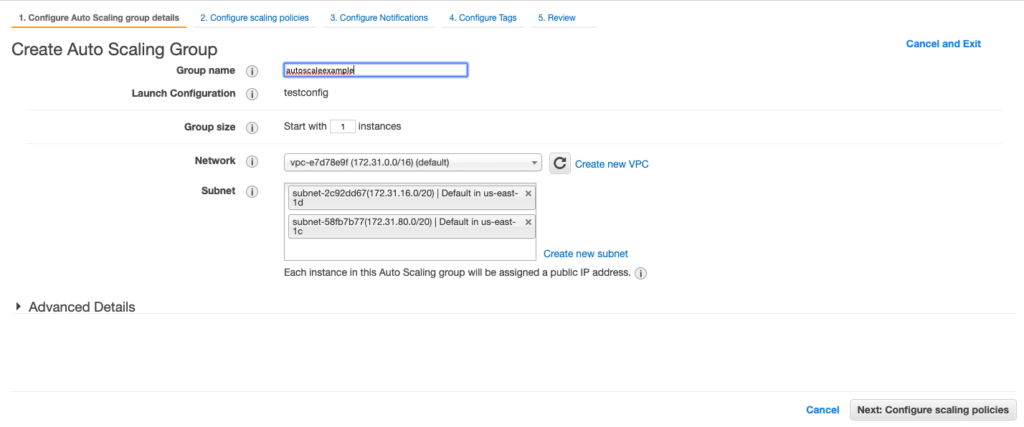

Once you create and select the launch configuration, you will be redirected to create the Auto Scaling group.

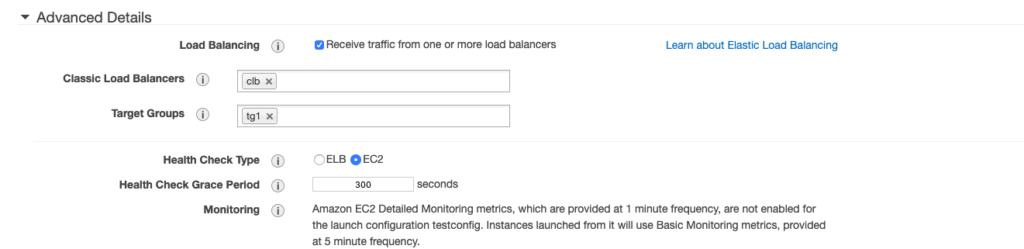

At this point, you can also specify whether your application instances are accessed through a load balancer.
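As a rough scripted equivalent of this step, the sketch below builds keyword arguments for boto3's `create_auto_scaling_group` call: it references a launch configuration by name, sets the size limits, places instances in subnets via `VPCZoneIdentifier` (a comma-separated string of subnet IDs), and optionally attaches Classic Load Balancers through `LoadBalancerNames`. The group name, subnet IDs, and load balancer name are hypothetical placeholders, and switching the health check type to `ELB` when a load balancer is attached is an optional design choice, not something the console forces.

```python
"""Sketch: an Auto Scaling group behind a Classic Load Balancer, as boto3 kwargs.

Names and IDs used below are hypothetical placeholders.
"""


def auto_scaling_group_params(name, launch_configuration_name, min_size, max_size,
                              subnet_ids, classic_elb_names=()):
    """Build kwargs for boto3's autoscaling client create_auto_scaling_group()."""
    if not 0 <= min_size <= max_size:
        raise ValueError("expected 0 <= min_size <= max_size")
    params = {
        "AutoScalingGroupName": name,
        "LaunchConfigurationName": launch_configuration_name,
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": min_size,                # start at the minimum; scaling adjusts it
        "VPCZoneIdentifier": ",".join(subnet_ids),  # subnets the group may launch into
    }
    if classic_elb_names:
        # Register new instances with the Classic Load Balancer(s) automatically.
        params["LoadBalancerNames"] = list(classic_elb_names)
        # Optional: replace instances the load balancer reports as unhealthy.
        params["HealthCheckType"] = "ELB"
    return params


if __name__ == "__main__":
    params = auto_scaling_group_params(
        name="my-app-asg",                         # hypothetical group name
        launch_configuration_name="my-app-lc",     # hypothetical launch configuration
        min_size=1,
        max_size=5,
        subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],  # hypothetical subnets
        classic_elb_names=["my-app-elb"],          # hypothetical Classic Load Balancer
    )
    print(params)
    # import boto3
    # boto3.client("autoscaling").create_auto_scaling_group(**params)
```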

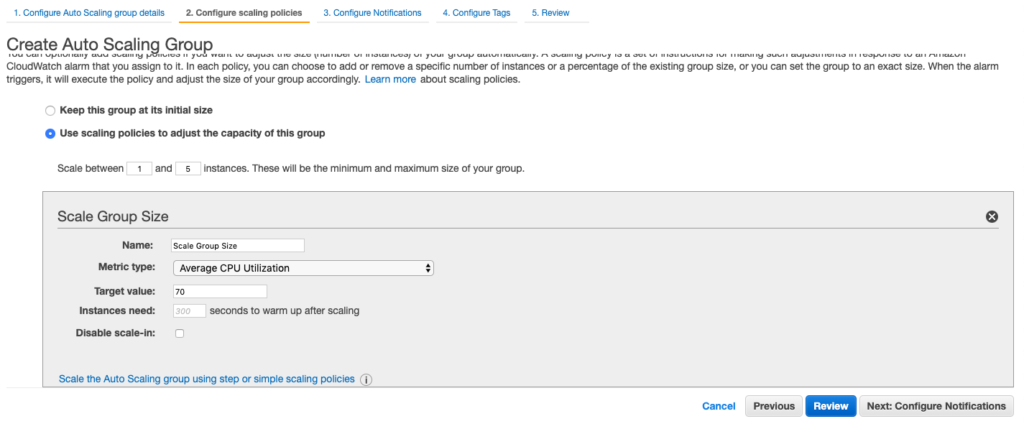

Next, you can set the scaling rules. For example, I can say that the minimum number of instances is 1 and the maximum is 5. I can also tell it to scale up or down to keep average CPU utilization at 70%: if utilization rises above that, new instances are created, and if it falls below, instances are terminated. Of course, the minimum and maximum limits are always honored.
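The 70% CPU rule corresponds to a target tracking policy on the predefined `ASGAverageCPUUtilization` metric, which boto3 exposes through `put_scaling_policy`. The sketch below builds that request and adds a deliberately simplified model of the resulting behaviour: scale capacity in proportion to how far the average CPU is from the target, then clamp to the 1..5 limits. That proportional formula is an assumption for illustration only, not AWS's exact algorithm (the real service also applies cooldowns, warm-up periods, and conservative scale-in), and the policy name is a hypothetical placeholder.

```python
"""Sketch: a 70% CPU target tracking policy plus a simplified capacity model."""
import math


def cpu_target_tracking_policy(group_name, target_cpu=70.0):
    """Build kwargs for boto3's autoscaling client put_scaling_policy()."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target",  # hypothetical naming scheme
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": target_cpu,
        },
    }


def next_capacity(current, avg_cpu, target_cpu=70.0, min_size=1, max_size=5):
    """Simplified model, NOT AWS's exact algorithm.

    Scale capacity proportionally so average CPU would land near the target,
    then clamp the result to the group's min/max limits.
    """
    desired = math.ceil(current * avg_cpu / target_cpu)
    return max(min_size, min(max_size, desired))


if __name__ == "__main__":
    print(cpu_target_tracking_policy("my-app-asg"))  # hypothetical group name
    # (current instances, average CPU %) scenarios
    for current, cpu in [(2, 140.0), (4, 35.0), (5, 100.0), (1, 10.0)]:
        print(f"{current} instances at {cpu}% CPU -> {next_capacity(current, cpu)}")
```

In the model, two instances at 140% of the target's load double to four, while a group already at five stays at five no matter how high the CPU goes, which is exactly the "max and min limits are honored" behaviour described above.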

You can leave all other options at their defaults or adjust them if needed. Once the group is created, it takes care of handling the load for your application, spawning new instances when needed and balancing cost against load for you.